Goodbye SaaS, Hello Intelligence Platforms: A Thesis Playing Out

The SaaS Era is Ending. Something Bigger is Taking Its Place.

For the last decade, SaaS was the default playbook. Recurring revenue, software margins, scalable teams: it all worked.

But 2024 marked a turning point.

We’re watching the center of gravity shift: from cloud apps to physical infrastructure, and from human-scale teams to AI-native operations.

And this isn’t just theory. It’s playing out in real time:

Chinese lab DeepSeek is matching GPT-4 performance at a fraction of the compute cost

Nvidia is pulling back, not from lack of relevance, but from raw physical constraint

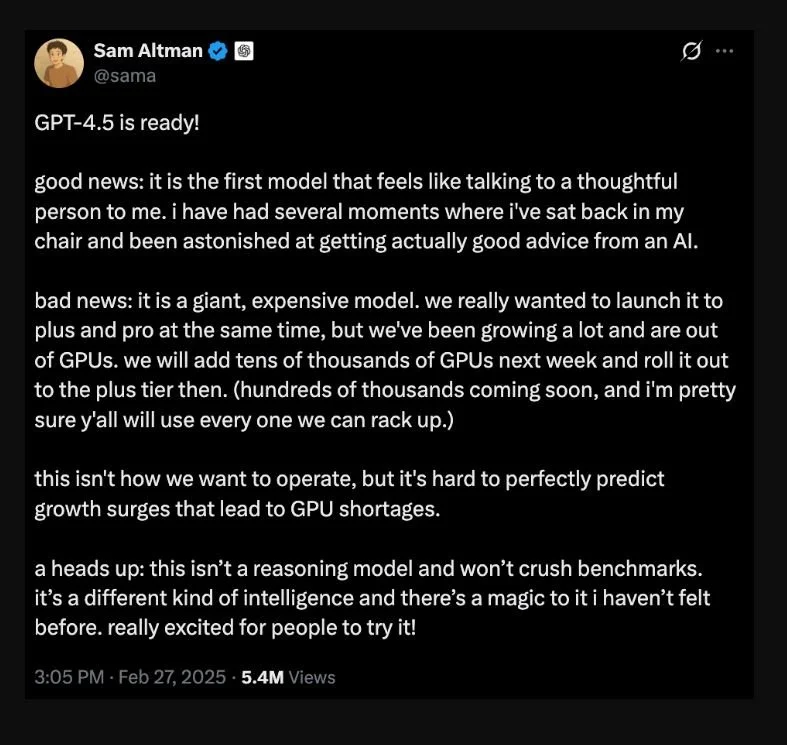

OpenAI’s Sam Altman recently dropped a blunt update: they’re out of GPUs

This isn’t a short-term market quirk. It’s the beginning of a deeper transition.

Sam Altman announces GPT-4.5: a powerful, thoughtful AI model with limited rollout due to GPU shortages.

Strategic Context: Efficiency Doesn’t Kill Demand—It Explodes It

At first glance, DeepSeek’s model breakthroughs might look like bad news for chipmakers: more performance with less compute. But that view misses the deeper truth.

This is Jevons Paradox at work, a concept from 19th-century economics that’s incredibly relevant to 21st-century AI.

British economist William Stanley Jevons observed in The Coal Question (1865) that as coal-burning engines became more efficient, coal consumption didn’t fall; it soared. Efficiency didn’t reduce demand. It lowered the effective cost of using coal, which made the resource more desirable and more embedded across the economy.

We're seeing the same with compute:

Lower inference costs = higher accessibility = exponential usage

AI is now doing to compute what broadband once did to video:

YouTube didn't kill TV because it was cheaper.

It killed TV because it was everywhere, always-on, and frictionless to consume.

Now, cheaper and more efficient AI models won’t slow down GPU demand; they’ll accelerate it. Lowering costs makes AI deployable across use cases we haven’t even imagined yet.

This is the real story behind the GPU squeeze, and why we’re positioning around hard assets, not pure software.
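To make the Jevons logic concrete, here is a minimal back-of-the-envelope sketch. It assumes a constant-elasticity demand curve, which is our simplification for illustration and not a measured property of the AI market. The function name `compute_demand_multiplier`, the 10x efficiency gain, and the elasticity values are all hypothetical.

```python
def compute_demand_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Return the change in total compute demand after an efficiency gain.

    Assumption (illustrative only): demand has constant price elasticity.
    If the cost per task falls by a factor of `efficiency_gain`, the number
    of tasks run rises by efficiency_gain ** elasticity, while each task
    needs only 1 / efficiency_gain as much compute as before.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    tasks_multiplier = efficiency_gain ** elasticity
    compute_per_task_multiplier = 1.0 / efficiency_gain
    return tasks_multiplier * compute_per_task_multiplier


if __name__ == "__main__":
    # Hypothetical scenario: models become 10x cheaper to run per task.
    for elasticity in (0.5, 1.0, 1.5):
        multiplier = compute_demand_multiplier(10.0, elasticity)
        print(f"elasticity={elasticity}: total compute demand x{multiplier:.2f}")
```

Under these assumptions, total compute demand grows only when elasticity is above 1, stays flat at exactly 1, and shrinks below 1. The Jevons argument for GPUs is, in effect, a bet that demand for AI is elastic enough to land in the first case.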

Why We’re Rotating Out of SaaS

SaaS once promised defensibility through recurring revenue and software lock-in. But open-source LLMs and no-code tools have drained the moat:

Anyone can now replicate multimillion-dollar SaaS tools with off-the-shelf infrastructure.

Solo developers—or AI agents—can rebuild what used to require 50+ engineers.

The result? Commoditization. Margin compression. Structural fragility.

We’ve exited several software-only names in favor of businesses that:

Control physical infrastructure

Operate in capital-intensive sectors

Use AI to amplify advantages, not just to survive disruption

What This Means: Enter the AI-Soloist Economy

We believe artificial intelligence today is where the iPhone was in 2007: misunderstood, underestimated, and about to redefine productivity.

But this wave won’t look like the last. Instead of teams scaling with software, individuals will scale with AI.

We're now entering what we call the AI-Soloist Economy:

Solo founders who build and scale like 50-person teams

AI-native companies with zero marginal labor costs

Capital deployment shifting to businesses that own and operate assets the digital world still relies on

We believe the first $100M solo-run company will emerge in the next 12 months. It won’t be a fluke; it will be a new archetype.

Key Takeaways:

SaaS is being disrupted, not by new features, but by foundational shifts in cost and scale

AI efficiency = more demand, not less. Jevons Paradox is here

Infrastructure, not just software, is becoming the critical layer

The AI-Soloist Economy is real, and it’s investable

At Garden Capital, we focus on these second-order effects, where structural change gets priced in last.

We’ll be tracking this closely, especially as hardware constraints, solo-scale entrepreneurship, and AI-native infrastructure begin to reshape the investing landscape.

What’s the next part of the SaaS stack you think gets commoditized? Or what’s still defensible in a world of infinite cognition?